Content from Introduction

Last updated on 2025-12-16

Estimated time: 60 minutes

Overview

Questions

- Why is interoperability important when dealing with research data?

- What are the three layers of interoperability?

- How can you identify if a dataset is interoperable or not?

Objectives

- Understand why interoperability matters in climate & atmospheric science

- Recognize the 3 layers: structural, semantic, technical

- Identify interoperable vs non-interoperable datasets

What is interoperability?

From the foundational article "The FAIR Guiding Principles for scientific data management and stewardship" (Wilkinson et al., 2016):

The three guiding principles for interoperability are:

- I1. (meta)data use a formal, accessible, shared, and broadly applicable language for knowledge representation.

- I2. (meta)data use vocabularies that follow FAIR principles

- I3. (meta)data include qualified references to other (meta)data

Assessing how interoperable a dataset is

You receive a dataset containing global precipitation estimates for 2010–2020. Its characteristics are:

- Provided as a NetCDF file.

- Variables have short, cryptic names (e.g., `prcp`, `lat`, `lon`).

- Metadata uses inconsistent units (some missing).

- Coordinates and grids are documented only in an accompanying PDF.

- The dataset includes a DOI and references two external datasets used for validation.

- No controlled vocabularies or community standards (e.g., CF, GCMD keywords) are used.

Based on the FAIR interoperability principles (I1–I3), how would you rate the interoperability of this dataset?

A. High interoperability: it uses a widely supported file format and includes references to other datasets.

B. Moderate interoperability: some technical elements exist, but semantic clarity and formal vocabularies are missing.

C. Low interoperability: metadata and semantic descriptions do not meet I1–I3 requirements.

D. Full interoperability: all three interoperability principles (I1, I2, I3) are clearly satisfied.

Correct answer: B or C, depending on how strictly the principles are applied; for educational clarity, choose C.

I1: Not satisfied (no formal/shared language for knowledge representation; heavy reliance on PDF documentation).

I2: Not satisfied (no controlled vocabularies, no standards).

I3: Partially satisfied (qualified references exist, but with insufficient context).

Overall, interoperability is low.

Identify the three layers of interoperability

Semantic interoperability = meaning

Semantic interoperability ensures that data carries shared, consistent meaning across institutions and tools. This is achieved through:

- standard vocabularies

- controlled terms

- variable naming conventions

- units

- coordinate definitions

Examples include CF standard names, attributes, and controlled vocabularies. Without semantic interoperability, datasets cannot be reliably interpreted, compared, or combined.

Structural interoperability = representation

Structural interoperability ensures that data is organized, stored, and encoded in predictable, machine-actionable ways. This is achieved through:

- common file formats

- shared data models

- consistent dimension and array structures

Examples include NetCDF, Zarr, and Parquet, which define how variables, coordinates, and metadata are stored. Structural interoperability allows tools across programming languages and platforms to read data consistently.

Technical interoperability = access

Technical interoperability ensures that data can be accessed, exchanged, and queried using standard, machine-readable mechanisms. This is achieved through:

- APIs

- remote access protocols

- web services

- cloud object storage interfaces

Examples include OPeNDAP, THREDDS and REST APIs. Technical interoperability enables automated workflows, cloud computing, and scalable analytics.

References

- European Commission (Ed.). (2004). European interoperability framework for pan-European egovernment services. Publications Office.

- European Commission. Directorate General for Research and Innovation. & EOSC Executive Board. (2021). EOSC interoperability framework: Report from the EOSC Executive Board Working Groups FAIR and Architecture. Publications Office. https://data.europa.eu/doi/10.2777/620649

Reflect back on the three guiding principles for interoperability (I1–I3) (Think-Pair-Discuss):

- I1. (meta)data use a formal, accessible, shared, and broadly applicable language for knowledge representation.

- I2. (meta)data use vocabularies that follow FAIR principles

- I3. (meta)data include qualified references to other (meta)data

Do they cover all three layers of interoperability (structural, semantic, technical)? Explain your reasoning.

FAIR’s interoperability principles emphasize semantic interoperability, while structural and technical layers are insufficiently addressed.

For a domain like climate science, where standards such as NetCDF-CF (structure and meaning) and OPeNDAP (access) matter enormously, these three guiding principles alone are not enough to guarantee practical interoperability.

Key elements of interoperable research workflows

Interoperable research workflows rely on a set of shared practices, formats, and technologies that allow data to be exchanged, understood, and reused consistently across tools and institutions. In climate and atmospheric science, these elements form the backbone of scalable, reproducible, and machine-actionable data ecosystems.

- Community formats (NetCDF, Zarr, Parquet) provide a common structural foundation.

  These formats encode data in predictable ways, with clear rules about dimensions, variables, and internal structure. NetCDF remains the dominant community standard for multidimensional geoscience data, while Zarr offers a cloud-native representation suitable for large-scale, distributed computing. Parquet complements both by providing an efficient columnar format for tabular or metadata-rich data. Using community formats ensures that tools across languages and platforms can interpret datasets consistently.

- Standardized metadata (CF conventions) provide the semantic layer needed for meaningful interpretation.

  CF conventions define variable names, units, coordinate systems, and grid attributes so that datasets from different sources “speak the same language.” This allows climate model output, satellite observations, and reanalysis products to be aligned and compared reliably.

- Stable APIs enable technical interoperability by providing machine-readable access to data and metadata.

  APIs based on HTTP and JSON allow automated workflows, programmatic data publication, and integration between repositories, processing systems, and analysis tools. A stable, well-documented API ensures that downstream services and scripts continue to function even as data collections evolve.

- Cloud-native layouts make large datasets scalable and performant.

  By storing data as independent chunks in object storage, formats such as Zarr allow parallel, lazy, and distributed access, which is ideal for big climate datasets, serverless workflows, and AI pipelines. This ensures that even multi-terabyte archives can be streamed efficiently without requiring full downloads.
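To make "independent chunks" concrete, here is a minimal, library-free sketch that computes which chunks of a regularly chunked array a rectangular selection touches; only those chunks would need to be fetched from object storage. The array shape, chunk shape, and the function name `chunks_for_selection` are hypothetical, and real formats such as Zarr perform this bookkeeping internally.

```python
from itertools import product


def chunks_for_selection(chunk_shape, selection):
    """Return grid indices of every chunk that overlaps a selection.

    chunk_shape: chunk length along each dimension, e.g. (24, 90, 180)
    selection:   one (start, stop) pair per dimension, stop exclusive
    """
    ranges = []
    for size, (start, stop) in zip(chunk_shape, selection):
        first = start // size          # chunk holding the first selected index
        last = (stop - 1) // size      # chunk holding the last selected index
        ranges.append(range(first, last + 1))
    # Every combination of per-dimension chunk indices is a touched chunk
    return list(product(*ranges))


# Hypothetical hourly global grid of shape (8760, 180, 360), chunked (24, 90, 180)
shape, chunk_shape = (8760, 180, 360), (24, 90, 180)

# First two days over a small lat/lon box
touched = chunks_for_selection(chunk_shape, [(0, 48), (10, 20), (100, 120)])
total = 1
for n, c in zip(shape, chunk_shape):
    total *= -(-n // c)                # ceiling division: chunks per dimension
print(touched)                          # [(0, 0, 0), (1, 0, 0)]
print(f"{len(touched)} of {total} chunks must be read")
```

Reading 2 of 1460 chunks instead of the whole archive is the practical payoff of a chunked, cloud-native layout.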

Together, these elements work as a coordinated system: community formats provide structure, metadata provides meaning, APIs provide access, and cloud-native layouts provide scalability.

This combination is what enables truly interoperable research workflows in general, and especially in modern climate and atmospheric science. Why?

Real world barriers to data interoperability and reuse (Think-Pair-Discuss)

Individual reflection (5 minutes)

Think of a time when you tried to reuse a dataset that you did not produce. What was the most significant barrier you encountered?

Pair discussion (5 minutes)

Share your experiences with your partner:

- What specific interoperability challenges did you face (structural, semantic, technical)?

- How did you try to overcome them?

- Would adherence to the FAIR I1–I3 principles have helped? If so, how?

Group debrief (5 minutes)

Discuss as a group:

- Common obstacles

- Whether these were structural, semantic, or technical

- How such challenges could be prevented if data producers had designed the dataset with interoperability in mind (e.g., CF conventions, persistent identifiers, shared vocabularies, formal metadata languages)

Examples of barriers to reusing datasets might include:

- Missing metadata

- Non-standard units or unclear variable names

- File formats you could not easily open

- Access restrictions or unstable URLs

- Large data volumes and inefficient download workflows

- Difficulty aligning datasets from multiple institutions

- Lack of documentation on coordinate systems or time conventions

- Inconsistent versions or unclear provenance

This discussion sets up the motivation for the rest of the workshop: practical, hands-on methods to make interoperable data using real tools such as NetCDF, CF conventions, and OPeNDAP.

Specific data challenges in the climate & atmospheric sciences

Heterogeneous data origins: Climate research integrates satellite retrievals, weather models, climate simulations, in-situ sensors, radar, lidar, aircraft measurements, and reanalysis datasets—each with its own structure, conventions, and processing workflows.

Different spatial and temporal resolutions: Satellite images may be daily or hourly at 1 km resolution, while climate models may provide monthly or daily outputs on coarse grids; combining them requires consistent metadata and alignment.

Multiple file formats and data models: Data may come as GRIB, NetCDF, GeoTIFF, HDF5, CSV, or Zarr, each with different structural assumptions that affect processing and interpretation.

Inconsistent metadata quality: Missing units, inconsistent variable names, unclear coordinate systems, or non-standard attributes are frequent issues—making semantic interoperability a major challenge.

Large data volume and velocity: Earth observation missions (e.g., Sentinel, GOES), reanalysis products (ERA5), and high-resolution climate simulations produce terabytes to petabytes of data, making efficient, interoperable access necessary.

Different access mechanisms and services: Data are distributed across portals using APIs, OPeNDAP servers, cloud object storage, FTP, THREDDS catalogs, proprietary download tools, or manual interfaces—requiring technical interoperability to automate workflows.

Versioning and reproducibility issues: Climate datasets evolve frequently (e.g., reprocessed satellite series, new CMIP6 versions), and without stable identifiers or catalog metadata, reproducibility becomes difficult across institutions.

Need for multi-model and multi-dataset comparisons: Studies such as model evaluation, bias correction, and data assimilation depend on aligning diverse datasets that were never originally designed to work together.

Why interoperability is essential

Interoperability is essential in climate and atmospheric science because researchers routinely work with multiple heterogeneous datasets that were never originally designed to work together. By ensuring that data are described consistently, stored in predictable structures, and accessed through standard mechanisms, interoperability makes it possible to combine and reuse data efficiently across research workflows.

First, interoperability enables data reuse: when datasets follow shared metadata conventions and formats, researchers can easily understand what variables represent, how they were produced, and how they can be used in new contexts. This avoids redundant effort and saves time across research groups.

Second, interoperability enables integration across sources—for example, combining model output with satellite observations, radar measurements, in-situ sensors, and reanalysis datasets. These data sources differ in resolution, structure, access method, and semantics; without shared standards, aligning them becomes difficult or impossible.

Third, interoperability reduces friction in data pipelines. Standardized formats, consistent metadata, and machine-actionable APIs allow workflows to run smoothly without manual cleaning, renaming, or restructuring. This is especially critical when handling large, frequently updated datasets typical in climate research.

Finally, interoperability is required for automation, AI, dashboards, and multi-disciplinary science. Machine learning pipelines, automated monitoring systems, and interactive applications rely on consistent, accessible, and machine-readable data. Without interoperability, these tools break or require extensive custom engineering.

In short, interoperability is what makes the diverse, high-volume data ecosystem of climate and atmospheric science usable, scalable, and scientifically trustworthy.

True/False or Agree/Disagree with discussion afterwards

- “As long as data are open access, they are interoperable.”

- “Metadata standards help ensure interoperability.”

- “As long as data use an open standard format, they are interoperable.”

Answers: False, True, False.

This exercise is meant for plenary discussion and serves as a good link to the next section.

Discuss with your peer:

Participants inspect the following small datasets:

- dataset 1: https://opendap.4tu.nl/thredds/dodsC/IDRA/2019/01/02/IDRA_2019-01-02_quicklook.nc.html

- dataset 2: https://swcarpentry.github.io/python-novice-inflammation/data/python-novice-inflammation-data.zip

- dataset 3: https://opendap.4tu.nl/thredds/dodsC/data2/uuid/9604a1b0-13b6-4f23-bd6c-bb028591307c/wind-2003.nc.html

Participants should identify whether the dataset is interoperable based on the three layers discussed (structural, semantic, technical).

Dataset 1: Interoperable

- Structure: NetCDF format with clear dimensions and variables.
- Metadata: CF-compliant attributes, standard names, units.
- Access: OPeNDAP protocol for remote access.

Dataset 2: Not interoperable

- Structure: CSV files with ambiguous column headers.
- Metadata: Lacks standardized metadata, unclear variable meanings.
- Access: Manual download, no API or remote access.

Dataset 3: Not interoperable

- Structure: NetCDF format but missing CF compliance.
- Metadata: Inconsistent or missing units, unclear variable names.
- Access: OPeNDAP protocol.

Interoperability ensures that data can be understood, combined, accessed, and reused across tools, institutions, and workflows with minimal manual intervention.

Interoperability operates at three complementary layers: structural (how data is encoded and organized), semantic (how data is described and interpreted), and technical (how data is accessed and exchanged).

The FAIR interoperability principles I1–I3 primarily address the semantic layer. They provide essential guidance on shared metadata languages, vocabularies, and references, but they do not fully cover structural and technical interoperability.

In climate and atmospheric science, all three layers are required for practical reuse. Structural standards (e.g., NetCDF, Zarr), semantic conventions (e.g., CF), and technical mechanisms (e.g., APIs, OPeNDAP, THREDDS) must work together.

Many real-world barriers to reusing datasets (unclear metadata, missing units, inconsistent coordinate systems, incompatible file formats, unstable access mechanisms) are failures of one or more interoperability layers.

Interoperable research workflows rely on established community formats, standardized metadata conventions, stable access protocols, and scalable cloud-native layouts that allow large heterogeneous datasets to be aligned, streamed, and analysed consistently.

Interoperability is essential in climate science because datasets come from diverse sources (models, satellites, sensors, reanalysis) and must be combined into integrated analyses that are reproducible and machine-actionable.

Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., … & Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3(1), 1-9.

Content from Structural interoperability

Last updated on 2025-12-16

Estimated time: 45 minutes

Overview

Questions

- What is structural interoperability?

- How do open standards and community governance enable structurally interoperable research data?

- Which structural expectations must a data format satisfy to support automated, machine-actionable workflows?

- Which open standards are commonly used in climate and atmospheric sciences to achieve structural interoperability?

- What is NetCDF’s data structure?

Objectives

- Explain structural interoperability in terms of data models, dimensions, variables, and metadata organization.

- Identify the role of open standards and community-driven governance in ensuring long-term structural interoperability.

- Describe the key structural expectations required for automated alignment, georeferencing, metadata interpretation, and scalable analysis.

- Analyze a NetCDF file to identify its core structural elements (dimensions, variables, coordinates, and attributes).

What is structural interoperability?

Structural interoperability refers to how data are organized internally: their dimensions, attributes, and encoding rules. It ensures that different tools can parse and manipulate a dataset without prior knowledge of a custom schema or ad-hoc documentation.

In climate and atmospheric sciences in particular, a structurally interoperable dataset meets the following requirements:

- Arrays must have known shapes and consistent dimension names (e.g., time, lat, lon, height).

- Metadata must follow predictable rules (e.g., attributes like units, missing_value, long_name).

- Coordinates must be clearly defined, enabling slicing, reprojection, or aggregation.

Structural interoperability answers the question: Can machines understand how this dataset is structured without human intervention?

Structural interoperability relies on open standards

To attain structural interoperability, two key aspects are essential: machine-actionability and longevity. Open standards ensure that these two aspects are met. An open standard is not merely a published file format. From the perspective of structural interoperability, an open standard guarantees that:

- The data model is publicly specified (arrays, dimensions, attributes, relationships)

- The rules for interpretation are explicit, not inferred from software behavior

- The specification can be independently implemented by multiple tools

- The standard evolves through transparent versioning, avoiding silent breaking changes

These properties ensure that a dataset remains structurally interpretable even when:

- The original software is no longer available

- The dataset is reused in a different scientific domain

- Automated agents, rather than humans, perform the analysis

Usually, these standards are adopted and maintained by a non-profit organization, and their ongoing development is driven by a community of users and developers through an open decision-making process.

Formats such as NetCDF, Zarr, and Parquet are developed or promoted by broad communities such as:

- Unidata (NetCDF)

- Pangeo (cloud-native geoscience workflows)

- Open Geospatial Consortium (OGC) & World Meteorological Organization (WMO)

These groups define formats that encode stable, widely adopted structural constraints that machines and humans can rely on.

Structural Interoperability is about Data Models, not just data formats

Structural interoperability does not emerge from file extensions alone. It is enforced by an underlying data model that defines:

- What kinds of objects exist (arrays, variables, coordinates)

- How those objects relate to one another

- Which relationships are mandatory, optional, or forbidden

Community standards such as NetCDF and Zarr succeed because they define and constrain a formal data model, rather than allowing arbitrary structure.

This is why structurally interoperable datasets can be:

- Programmatically inspected

- Automatically validated

- Reliably transformed across tools and platforms
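The sketch below models a tiny subset of a NetCDF-like data model with plain Python dataclasses: named dimensions with lengths, and variables that must reference declared dimensions with a matching shape. The class names and the `validate` method are hypothetical illustrations; the netCDF libraries and xarray enforce comparable rules internally, which is what makes automatic validation possible.

```python
from dataclasses import dataclass, field


@dataclass
class Variable:
    name: str
    dims: tuple          # names of the dimensions this variable spans
    shape: tuple         # array length along each of those dimensions
    attrs: dict = field(default_factory=dict)


@dataclass
class Dataset:
    dimensions: dict                      # dimension name -> length
    variables: list = field(default_factory=list)

    def validate(self):
        """Return a list of violations of this simplified data model."""
        errors = []
        for var in self.variables:
            if len(var.dims) != len(var.shape):
                errors.append(f"{var.name}: {len(var.dims)} dims but shape {var.shape}")
                continue
            for dim, length in zip(var.dims, var.shape):
                if dim not in self.dimensions:
                    errors.append(f"{var.name}: undeclared dimension '{dim}'")
                elif self.dimensions[dim] != length:
                    errors.append(
                        f"{var.name}: length {length} along '{dim}', "
                        f"expected {self.dimensions[dim]}"
                    )
        return errors


ds = Dataset(
    dimensions={"time": 12, "lat": 180, "lon": 360},
    variables=[
        Variable("tas", ("time", "lat", "lon"), (12, 180, 360)),
        Variable("pr", ("time", "latitude", "lon"), (12, 180, 360)),
    ],
)
print(ds.validate())  # ["pr: undeclared dimension 'latitude'"]
```

The mandatory relationship here ("a variable may only use declared dimensions") is exactly the kind of rule that lets a machine reject a malformed file without human inspection.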

Structural expectations encoded in open standards (Think-Pair-Discuss)

Which structural expectations are required for:

- automated alignment of datasets along common dimensions (e.g., time, latitude, longitude)

- georeferencing and spatial indexing

- correct interpretation of units, scaling factors, and missing values

- efficient analysis of large datasets using chunking and compression

Automated alignment of datasets along common dimensions (e.g., time, latitude, longitude)

- Required structural expectation: Named, ordered dimensions

Explanation: Automated alignment requires that datasets explicitly declare:

- Dimension names (e.g., `time`, `lat`, `lon`)

- Dimension order and length

Open standards such as NetCDF and Zarr enforce explicit dimension definitions. Tools like xarray rely on this structure to automatically align arrays across datasets without manual intervention.
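As a minimal, library-free sketch of what alignment along a named dimension means, the function below performs an inner join of two labelled series on their shared `time` coordinate values, roughly what `xarray.align(a, b, join="inner")` does for labelled arrays. The data and the function name `align_inner` are hypothetical.

```python
def align_inner(a, b, dim="time"):
    """Inner-join two labelled 1-D series on a shared dimension.

    Each series is a dict: {"dims": (dim,), "coords": [...], "values": [...]}.
    Alignment is only possible because both declare the SAME dimension name.
    """
    if a["dims"] != (dim,) or b["dims"] != (dim,):
        raise ValueError(f"both series must be indexed by '{dim}'")
    lookup_a = dict(zip(a["coords"], a["values"]))
    lookup_b = dict(zip(b["coords"], b["values"]))
    common = [c for c in a["coords"] if c in lookup_b]   # keep a's order
    return common, [lookup_a[c] for c in common], [lookup_b[c] for c in common]


model = {"dims": ("time",), "coords": ["2020-01", "2020-02", "2020-03"],
         "values": [2.1, 3.4, 1.8]}
station = {"dims": ("time",), "coords": ["2020-02", "2020-03", "2020-04"],
           "values": [3.0, 2.2, 0.9]}

times, m, s = align_inner(model, station)
print(times)  # ['2020-02', '2020-03']
print(m, s)   # [3.4, 1.8] [3.0, 2.2]
```

If one dataset called the axis `t` instead of `time`, the function refuses to align, mirroring why consistent dimension names matter.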

Georeferencing and spatial indexing

- Required structural expectations: Coordinate variables, Explicit relationships between coordinates and data variables

Explanation: Georeferencing depends on the structural presence of coordinate variables that:

- Map array indices to real-world locations

- Are explicitly linked to data variables via dimensions

This enables spatial indexing, masking, reprojection, and subsetting. Without declared coordinates, spatial operations require external knowledge and break structural interoperability.
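A coordinate variable is what turns an array index into a real-world location. The plain-Python sketch below uses a sorted 1-D latitude coordinate to find the index nearest to a requested latitude, similar in spirit to xarray's `.sel(lat=..., method="nearest")`. The grid, the made-up data values, and the function name `nearest_index` are hypothetical.

```python
from bisect import bisect_left


def nearest_index(coord, value):
    """Return the index of the coordinate value closest to `value`.

    `coord` must be sorted in increasing order (a monotonic coordinate
    variable), which is what makes label-based lookup well defined.
    """
    i = bisect_left(coord, value)
    if i == 0:
        return 0
    if i == len(coord):
        return len(coord) - 1
    # Pick whichever neighbour is closer; ties go to the lower index
    return i if coord[i] - value < value - coord[i - 1] else i - 1


lat = [-89.5 + k for k in range(180)]               # 1-degree cell centres
temps = [round(30 - abs(x) / 3, 1) for x in lat]    # made-up data variable on lat

j = nearest_index(lat, 52.2)
print(j, lat[j], temps[j])  # 142 52.5 12.5
```

Without the `lat` coordinate, "52.2 degrees north" could not be translated into index 142 without external documentation.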

Correct interpretation of units, scaling factors, and missing values

- Required structural expectation: Consistent attribute schema

Explanation: Open standards require metadata attributes to be:

- Explicitly declared

- Attached to variables

- Machine-readable (e.g., `units`, `scale_factor`, `add_offset`, `_FillValue`)

This allows tools to correctly interpret numerical values without relying on external documentation. While the meaning of units is semantic, their presence and location are structural requirements.
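Because these attributes sit in a predictable place (on the variable), decoding can be fully mechanical. The plain-Python sketch below applies the CF packing rule `unpacked = packed * scale_factor + add_offset` and masks entries equal to `_FillValue`, which is what libraries such as xarray do automatically when decoding CF data. The stored values and attribute values are made up.

```python
def decode_cf_packed(raw, attrs):
    """Decode packed integers using CF-style attributes.

    Fill values are compared against the RAW stored numbers, before scaling,
    and are replaced by None (a stand-in for NaN or a masked value).
    """
    scale = attrs.get("scale_factor", 1.0)
    offset = attrs.get("add_offset", 0.0)
    fill = attrs.get("_FillValue")
    return [None if v == fill else v * scale + offset for v in raw]


attrs = {"units": "K", "scale_factor": 0.01, "add_offset": 273.15,
         "_FillValue": -32767}
raw = [0, 1250, -32767, -500]             # int16 values as stored on disk

decoded = decode_cf_packed(raw, attrs)
print([None if v is None else round(v, 2) for v in decoded])
# [273.15, 285.65, None, 268.15]
print(attrs["units"])  # K
```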

Efficient analysis of large datasets using chunking and compression

- Required structural expectation: Chunking and compression mechanisms defined by the standard

Explanation: Formats such as NetCDF4 and Zarr define:

- How data is divided into chunks

- How chunks are compressed

- How chunks can be accessed independently

This structural organization enables parallel, lazy, and out-of-core computation, which is essential for large-scale climate and atmospheric datasets.
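The sketch below illustrates the principle with Python's standard `zlib` module (DEFLATE, the algorithm behind NetCDF4's zlib compression): an array is split into fixed-size chunks, each chunk is compressed independently, and a single value is read back by decompressing only the chunk that contains it. The chunk size and the in-memory `store` dictionary are hypothetical stand-ins for what NetCDF4/HDF5 or Zarr manage on disk or in object storage.

```python
import array
import zlib

CHUNK = 1000  # elements per chunk (hypothetical chunk size)


def write_chunked(values):
    """Split values into chunks and compress each chunk independently."""
    store = {}
    for start in range(0, len(values), CHUNK):
        buf = array.array("d", values[start:start + CHUNK]).tobytes()
        store[start // CHUNK] = zlib.compress(buf)
    return store


def read_value(store, index):
    """Read one element by decompressing only the chunk that holds it."""
    chunk_id, offset = divmod(index, CHUNK)
    chunk = array.array("d")
    chunk.frombytes(zlib.decompress(store[chunk_id]))
    return chunk[offset]


# A smooth, highly compressible series of 10,000 doubles
data = [float(i // 100) for i in range(10_000)]
store = write_chunked(data)

raw_bytes = len(data) * 8
stored_bytes = sum(len(c) for c in store.values())
print(len(store), "chunks;", raw_bytes, "raw bytes ->", stored_bytes, "compressed")
print(read_value(store, 4321))  # 43.0 (only chunk 4 of 10 is decompressed)
```

Because chunks are independent, different workers can read or decompress different chunks in parallel, which is what enables lazy and out-of-core computation.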

If you want to deepen discussion, ask participants:

- Which of these expectations would still work if metadata were incomplete?

- Which expectations primarily benefit machines rather than humans?

This naturally leads into the next section on NetCDF structure and the boundary between structural and semantic interoperability.

NetCDF

Once upon a time, in the 1980s at the University Corporation for Atmospheric Research (UCAR) and Unidata, a group of researchers came together to address the need for a common format for atmospheric science data. They asked: how can we share our data effectively, so that it is portable and easy to read by both humans and machines? The answer was NetCDF.

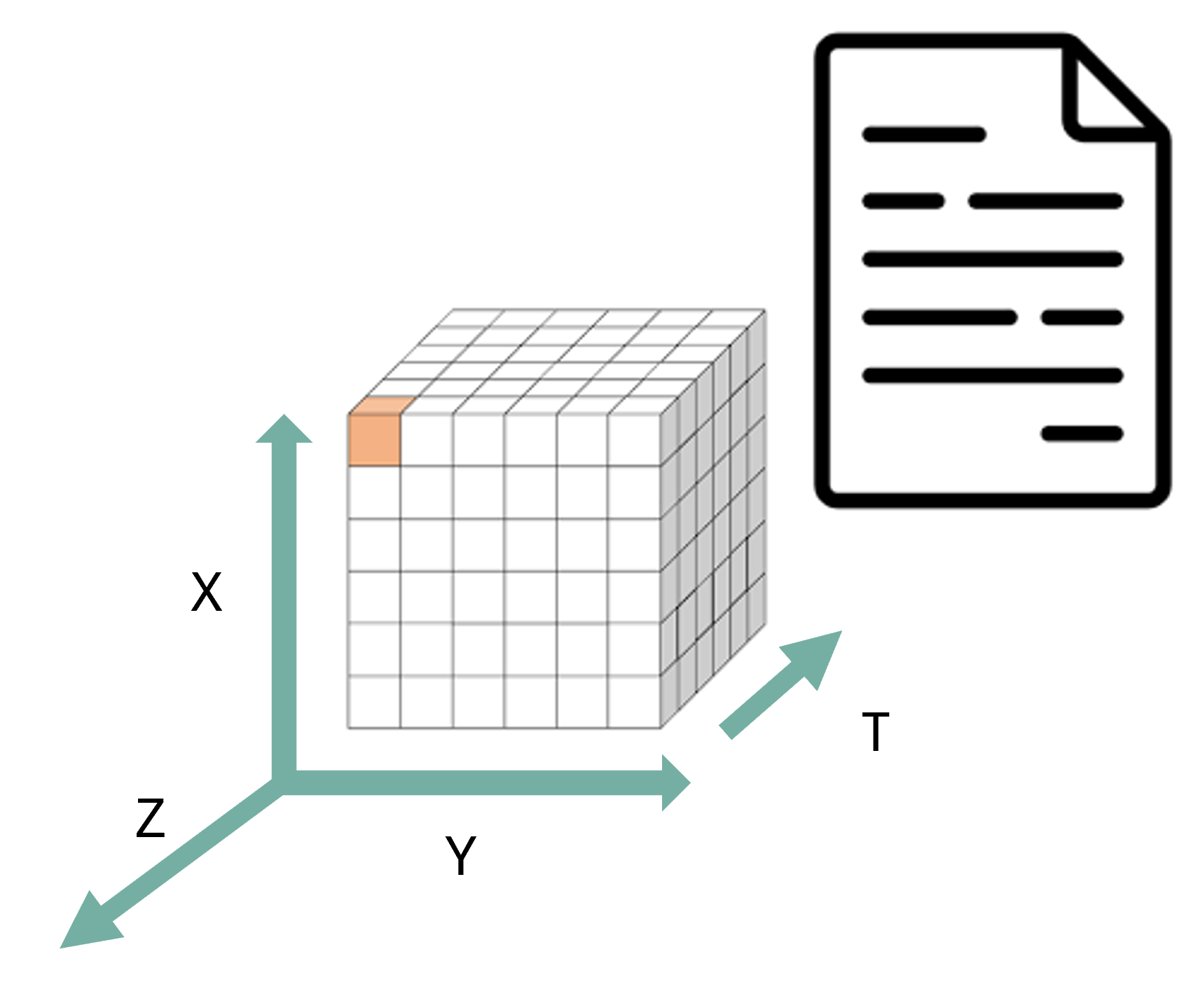

NetCDF (Network Common Data Form) is a set of software libraries and self-describing, machine-independent data formats that support the creation, access, and sharing of array-oriented scientific data.

Self-describing structure (Structural layer):

- One file carries data and metadata, usable on any system. This means that there is a header which describes the layout of the rest of the file, in particular the data arrays, as well as arbitrary file metadata in the form of name/value attributes.

- Dimensions define axes (time, lat, lon, level).

- Variables store multidimensional arrays.

- Coordinate variables describe spatial/temporal context.

- Attributes are metadata per variable, and for the whole file.

- Variable attributes encode units, fill values, variable names, etc.

- Global attributes provide dataset-level metadata (e.g., title, creator, conventions, year).

- No external schema is required: the file contains its own structural metadata.
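To see what "self-describing" looks like in practice, the sketch below renders a small dataset description as a CDL-style header, the text notation that `ncdump -h` prints for a NetCDF file. The dataset content is hypothetical and the rendering is simplified (for example, every attribute value is written as a string).

```python
def cdl_header(name, dims, variables, global_attrs):
    """Render a simplified CDL header, the text form printed by `ncdump -h`.

    variables maps a name to (type, dimension names, attributes);
    all attribute values are treated as strings in this sketch.
    """
    lines = [f"netcdf {name} {{", "dimensions:"]
    for dim, size in dims.items():
        lines.append(f"\t{dim} = {size} ;")
    lines.append("variables:")
    for var, (dtype, var_dims, attrs) in variables.items():
        lines.append(f"\t{dtype} {var}({', '.join(var_dims)}) ;")
        for key, value in attrs.items():
            lines.append(f'\t\t{var}:{key} = "{value}" ;')
    lines.append("")
    lines.append("// global attributes:")
    for key, value in global_attrs.items():
        lines.append(f'\t\t:{key} = "{value}" ;')
    lines.append("}")
    return "\n".join(lines)


header = cdl_header(
    "example",
    {"time": 3, "lat": 2, "lon": 2},
    {
        "time": ("double", ("time",), {"units": "days since 2000-01-01"}),
        "lat": ("float", ("lat",), {"units": "degrees_north"}),
        "lon": ("float", ("lon",), {"units": "degrees_east"}),
        "tas": ("float", ("time", "lat", "lon"),
                {"units": "K", "standard_name": "air_temperature"}),
    },
    {"title": "toy dataset", "Conventions": "CF-1.8"},
)
print(header)
```

Dimensions, variables with their coordinate axes, variable attributes, and global attributes all appear in one header, which is why no external schema is needed.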

Other useful open standards used in the field

Zarr (cloud-native, chunked, distributed)

Zarr is a format designed for scalable cloud workflows and interactive high-performance analytics.

Structural characteristics:

- Stores arrays in chunked, compressed pieces across object storage systems.

- Supports hierarchical metadata similar to NetCDF.

- Uses JSON metadata that tools interpret consistently (e.g., xarray reading a Zarr store through an fsspec mapper).

- Works seamlessly with Dask for parallelized computation.

Why it matters:

- Enables analysis of petabyte-scale datasets (e.g., NASA Earthdata Cloud).

- Structural rules are community-governed (Zarr v3 specification).

- Allows fine-grained access—no need to download entire files.

Zarr is becoming central for cloud-native structural interoperability.
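Zarr's structural contract is visible in its JSON metadata. The sketch below parses a Zarr v2-style `.zarray` document with the standard library and derives the storage keys of all chunks, which in Zarr v2 are the chunk grid indices joined by `.` by default (e.g. `0.1.0`). The metadata values are made up, and Zarr v3 uses a different metadata file (`zarr.json`) and key layout, so treat this as a v2-only illustration.

```python
import json
import math
from itertools import product

# Hypothetical Zarr v2 array metadata (contents of a `.zarray` file)
ZARRAY = """
{
  "zarr_format": 2,
  "shape": [8760, 180, 360],
  "chunks": [720, 90, 180],
  "dtype": "<f4",
  "compressor": {"id": "zlib", "level": 4},
  "fill_value": "NaN",
  "order": "C",
  "filters": null
}
"""


def chunk_keys(meta):
    """List the storage key of every chunk of a Zarr v2 array."""
    sep = meta.get("dimension_separator", ".")    # v2 default is "."
    # Number of chunks per dimension; the last chunk may be partial
    grid = [math.ceil(s / c) for s, c in zip(meta["shape"], meta["chunks"])]
    return [sep.join(map(str, idx)) for idx in product(*(range(n) for n in grid))]


meta = json.loads(ZARRAY)
keys = chunk_keys(meta)
print(meta["dtype"], meta["compressor"]["id"])   # <f4 zlib
print(len(keys), keys[:3])  # 52 ['0.0.0', '0.0.1', '0.1.0']
```

Any tool that understands this small JSON contract can locate and fetch individual chunks from object storage without opening the rest of the array.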

Parquet (columnar, schema-driven)

Parquet offers efficient tabular storage and is ideal for:

- Station data

- Metadata catalogs

- Observational time series

Structural characteristics:

- Strongly typed schema ensures column-level consistency.

- Supports complex types but enforces stable structure.

- Columnar layout enables fast filtering and analytical queries.

In climate workflows:

- Parquet is used for catalog metadata in Intake, STAC, and Pangeo Forge.

- Structural predictability supports machine-discoverable datasets.

Parquet complements NetCDF/Zarr, addressing non-array use cases.
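Parquet files are usually written through libraries such as pyarrow or pandas. As a dependency-free illustration of the two structural ideas above (a fixed, typed schema and a columnar layout), the sketch below pivots station records into typed columns and rejects records that violate the schema. The schema, records, and function name are hypothetical, and this is not the Parquet file format itself.

```python
SCHEMA = {"station_id": str, "time": str, "precip_mm": float}


def to_columns(records, schema):
    """Pivot row records into typed columns, rejecting schema violations."""
    columns = {name: [] for name in schema}
    for n, rec in enumerate(records):
        if set(rec) != set(schema):
            raise ValueError(f"record {n}: fields {sorted(rec)} do not match schema")
        for name, typ in schema.items():
            value = rec[name]
            if not isinstance(value, typ):
                raise TypeError(f"record {n}: {name}={value!r} is not {typ.__name__}")
            columns[name].append(value)
    return columns


rows = [
    {"station_id": "A01", "time": "2020-01-01", "precip_mm": 1.2},
    {"station_id": "A01", "time": "2020-01-02", "precip_mm": 0.0},
    {"station_id": "B07", "time": "2020-01-01", "precip_mm": 4.5},
]
cols = to_columns(rows, SCHEMA)

# Columnar layout: this filter only needs two of the three columns
wet = [s for s, p in zip(cols["station_id"], cols["precip_mm"]) if p > 1.0]
print(wet)  # ['A01', 'B07']
```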

Identify the structural elements in a NetCDF file

Please perform the following steps to explore the structural elements of a NetCDF file:

1. Open a NetCDF file: https://opendap.4tu.nl/thredds/dodsC/IDRA/2019/01/02/IDRA_2019-01-02_quicklook.nc.html

2. Identify the variable metadata.

3. Identify the global attributes.

4. Identify the dimensions and coordinate variables.
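One way to carry out these steps programmatically is with xarray, assuming `xarray` and `numpy` are installed (and, for the remote file, a backend with OPeNDAP support such as netCDF4). The helper `describe_structure` is hypothetical; it only reads the standard `sizes`, `coords`, `data_vars`, and `attrs` properties of an `xarray.Dataset`. To stay runnable offline, it is shown on a small in-memory dataset; the commented-out lines show the same call on the exercise file, using the dataset endpoint without the trailing `.html`.

```python
import numpy as np
import xarray as xr


def describe_structure(ds):
    """Collect the core structural elements of an xarray.Dataset."""
    return {
        "dimensions": dict(ds.sizes),
        "coordinates": list(ds.coords),
        "data_variables": {
            name: {"dims": var.dims, "attrs": dict(var.attrs)}
            for name, var in ds.data_vars.items()
        },
        "global_attributes": dict(ds.attrs),
    }


# Small in-memory stand-in for a NetCDF file
ds = xr.Dataset(
    data_vars={
        "tas": (("time", "lat"), np.zeros((2, 3)),
                {"units": "K", "standard_name": "air_temperature"}),
    },
    coords={"time": [0, 1], "lat": [-10.0, 0.0, 10.0]},
    attrs={"title": "toy example", "Conventions": "CF-1.8"},
)

summary = describe_structure(ds)
for section, content in summary.items():
    print(section, "->", content)

# Remote version (needs network access and an OPeNDAP-capable backend):
# url = "https://opendap.4tu.nl/thredds/dodsC/IDRA/2019/01/02/IDRA_2019-01-02_quicklook.nc"
# print(describe_structure(xr.open_dataset(url)))
```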

- Structural interoperability concerns how data are organized, not what they mean.

- Open standards are essential for machine-actionability and long-term reuse.

- Structural interoperability is enforced by data models, not file extensions.


- Standards maintained by communities (e.g. Unidata, Pangeo, OGC/WMO) encode shared structural contracts that tools and workflows can reliably depend on.

- NetCDF exemplifies structural interoperability for multidimensional geoscience data.

Content from Semantic interoperability

Last updated on 2025-12-17

Estimated time: 20 minutes

Overview

Questions

- What is semantic interoperability?

- Why is structural interoperability alone insufficient for meaningful data reuse?

- How do community metadata conventions (e.g. CF) encode shared scientific meaning?

- What does it mean for a NetCDF file to be “CF-compliant”?

Objectives

By the end of this episode, learners will be able to:

- Distinguish between structural and semantic interoperability.

- Explain why shared vocabularies and conventions are required for machine-actionable meaning.

- Describe the role of the CF Conventions in climate and atmospheric sciences.

- Apply a CF compliance checker to evaluate how CF-compliant NetCDF files are.

What is semantic interoperability?

Semantic interoperability concerns shared meaning.

A dataset is semantically interoperable when machines and humans interpret its variables in the same scientific way, without relying on informal documentation, personal knowledge, or context outside the data itself. Semantic interoperability answers the question:

“Do we agree on what this data represents?”

This goes beyond structure. Two datasets may both contain a variable named `temp`, stored as a float array over time and space, yet represent:

- air temperature at 2 m

- sea surface temperature

- model potential temperature

- sensor voltage converted to temperature

Are these the same quantity? No.

Without semantic constraints, machines cannot reliably compare, combine, or reuse such data.
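The sketch below makes the `temp` problem concrete, with plain Python dictionaries standing in for variable attributes: matching on the variable name wrongly pairs two different quantities (even though both carry `units = "K"`), while matching on the CF `standard_name` attribute does not. The datasets and function names are illustrative.

```python
dataset_a = {"temp": {"standard_name": "air_temperature", "units": "K"}}
dataset_b = {"temp": {"standard_name": "sea_surface_temperature", "units": "K"}}
dataset_c = {"t2m": {"standard_name": "air_temperature", "units": "K"}}


def match_by_name(x, y):
    """Naive matching: same variable name => assumed same quantity."""
    return sorted(set(x) & set(y))


def match_by_standard_name(x, y):
    """Semantic matching: pair variables that share a CF standard_name."""
    pairs = []
    for name_x, attrs_x in x.items():
        sn = attrs_x.get("standard_name")
        for name_y, attrs_y in y.items():
            if sn is not None and sn == attrs_y.get("standard_name"):
                pairs.append((name_x, name_y, sn))
    return pairs


print(match_by_name(dataset_a, dataset_b))           # ['temp'] (false match)
print(match_by_standard_name(dataset_a, dataset_b))  # [] (correctly no match)
print(match_by_standard_name(dataset_a, dataset_c))  # [('temp', 't2m', 'air_temperature')]
```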

Why structural interoperability is not enough

Structural interoperability ensures that:

dimensions are explicit,

arrays align,

metadata is machine-readable.

However, structure does not define meaning.

Example:

A NetCDF file may be perfectly readable by xarray. Variables may have dimensions (`time`, `lat`, `lon`), and units may be present. Yet machines still cannot know:

- what physical quantity is represented,

- at which reference height or depth,

- whether values are comparable across datasets.

This gap is addressed by semantic conventions, not file formats.

Semantic interoperability via CF Conventions

The Climate and Forecast (CF) Conventions define a shared semantic layer on top of NetCDF’s structural model.

CF specifies, among other things:

- Standard names: a controlled vocabulary linking variables to formally defined physical quantities (e.g., `air_temperature`, `sea_surface_temperature`).

- Units: enforced through UDUNITS-compatible expressions.

- Coordinate semantics: the meaning of vertical coordinates, bounds, and reference systems.

- Grid mappings and projections: explicit spatial reference information.

- Relationships between variables: for example, how bounds, auxiliary coordinates, or cell methods relate to data variables.
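Coordinate semantics are what let software turn raw numbers into meaningful positions. For example, CF time coordinates store plain numbers together with a `units` string of the form `<unit> since <reference time>`. The sketch below decodes such values with the standard library, assuming the standard (Gregorian) calendar and a simple `YYYY-MM-DD` reference date; real decoders such as cftime or xarray also handle other calendars and full timestamp syntax. The function name `decode_cf_time` is hypothetical.

```python
from datetime import datetime, timedelta

# Seconds per time unit supported by this simplified sketch
UNIT_SECONDS = {"days": 86400, "hours": 3600, "minutes": 60, "seconds": 1}


def decode_cf_time(values, units):
    """Decode numeric CF time values, e.g. units='hours since 2000-01-01'."""
    unit, sep, reference = units.partition(" since ")
    if not sep or unit not in UNIT_SECONDS:
        raise ValueError(f"unsupported time units: {units!r}")
    origin = datetime.strptime(reference.strip(), "%Y-%m-%d")
    return [origin + timedelta(seconds=v * UNIT_SECONDS[unit]) for v in values]


times = decode_cf_time([0, 36, 744], "hours since 2000-01-01")
print([t.isoformat() for t in times])
# ['2000-01-01T00:00:00', '2000-01-02T12:00:00', '2000-02-01T00:00:00']
```

The same number, 744, means a different instant under `days since 2000-01-01`; the `units` string carries that meaning.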

By adhering to CF, datasets become semantically interoperable:

- A NetCDF file without CF can be structurally interoperable, but it is semantically ambiguous.

- A NetCDF file with CF becomes interpretable across tools, domains, and time.

Community governance and ecosystem alignment

Semantic interoperability is not achieved by individual researchers alone.

CF Conventions are:

- Developed and maintained by a broad scientific community

- Reviewed, versioned, and openly governed

- Adopted by major infrastructures and workflows

This shared semantic contract enables cross-dataset comparison, automated discovery and filtering, and large-scale synthesis and reuse.

Semantic interoperability: True or False?

Indicate whether each statement is True or False, and justify your answer.

1. A NetCDF file with dimensions, variables, and units is semantically interoperable by default.

2. CF standard names allow machines to distinguish between different kinds of “temperature”.

3. Semantic interoperability mainly benefits human readers, not automated workflows.

4. Two datasets using the same CF standard name can be compared without manual interpretation.

5. Semantic interoperability can be achieved without community-agreed conventions.

1. False. Structure alone does not define meaning; semantics require controlled vocabularies and conventions.

2. True. CF standard names explicitly encode physical meaning, not just labels.

3. False. Semantic interoperability is essential for automated discovery, comparison, and integration.

4. True. Shared semantics enable machine-actionable comparability (subject to resolution and context).

5. False. Semantic interoperability depends on community agreement, not individual interpretation.

CF compliance

A NetCDF file is considered CF-compliant when it adheres to the rules and conventions defined by the CF standard.

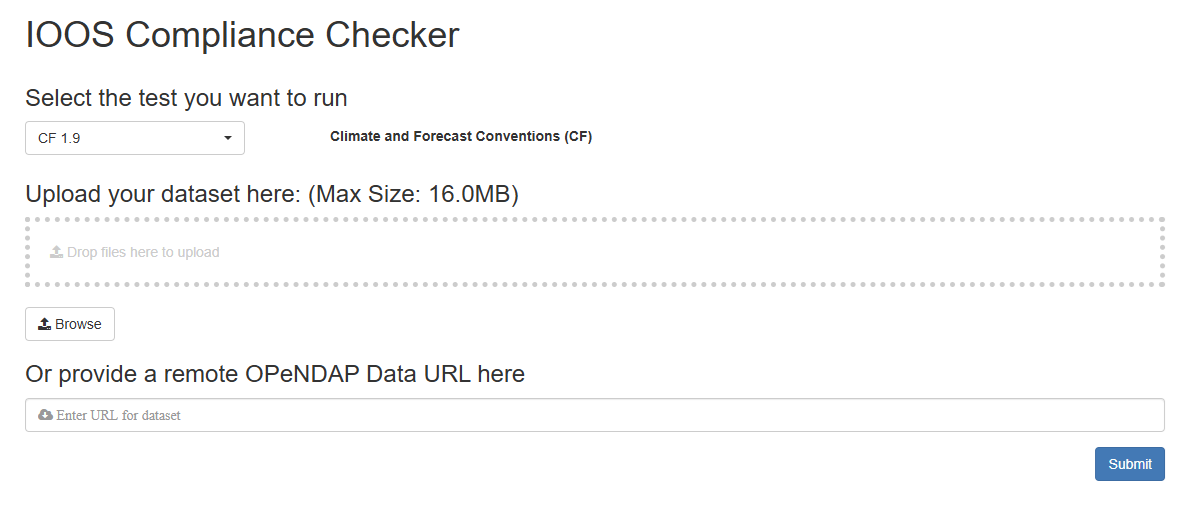

The IOOS Compliance Checker is a Python-based web application that evaluates NetCDF files against the CF Conventions. Its source code is available on GitHub.

Try the Compliance Checker

- Go to the IOOS Compliance Checker.

- Explore the interface and options.

- Provide a valid remote OPeNDAP URL, that is, the endpoint of the dataset itself and not an HTML page:

  - Valid URL pattern: https://opendap.4tu.nl/thredds/dodsC/IDRA/year/month/day/filename.nc

  - For example, use this sample dataset: https://opendap.4tu.nl/thredds/dodsC/IDRA/2009/04/27/IDRA_2009-04-27_06-08_raw_data.nc

  - Wrong URL (HTML catalog page): https://opendap.4tu.nl/thredds/catalog/IDRA/2009/04/27/catalog.html?dataset=IDRA_scan/2009/04/27/IDRA_2009-04-27_06-08_raw_data.nc

- Click on Submit.

- Review and download the report.
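The Compliance Checker runs far more checks than can be shown here, but the idea behind a few of them is easy to sketch. The function below (basic_cf_report, a hypothetical helper, assuming xarray and NumPy are installed) only looks for a CF version in the global Conventions attribute, a units attribute on each data variable, and a standard_name or long_name. It illustrates the kind of metadata that makes a file machine-interpretable; it does not implement the CF Conventions.

```python
import numpy as np
import xarray as xr


def basic_cf_report(ds: xr.Dataset) -> list[str]:
    """List problems found by a few basic, CF-style attribute checks.

    Illustration only: this is not an implementation of the CF Conventions
    and does not replace the IOOS Compliance Checker.
    """
    issues = []
    # CF files declare the convention version globally, e.g. "CF-1.8".
    if "CF-" not in str(ds.attrs.get("Conventions", "")):
        issues.append("global attribute 'Conventions' does not declare a CF version")
    for name, var in ds.data_vars.items():
        # xarray moves 'units' of decoded time variables into .encoding.
        if "units" not in var.attrs and "units" not in var.encoding:
            issues.append(f"{name}: missing 'units'")
        if "standard_name" not in var.attrs and "long_name" not in var.attrs:
            issues.append(f"{name}: missing both 'standard_name' and 'long_name'")
    return issues


if __name__ == "__main__":
    # A hypothetical variable with a cryptic name and no metadata at all.
    ds = xr.Dataset({"prcp": (("lat",), np.zeros(2))}, coords={"lat": [0.0, 1.0]})
    for issue in basic_cf_report(ds):
        print(issue)
```

Running it on the hypothetical prcp variable reports three issues; adding Conventions, units and a standard name clears them, while the real checker would still go on to validate the values of those attributes.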

Semantic interoperability ensures that data variables have shared, machine-actionable scientific meaning, not just readable structure.

Structural interoperability is necessary but insufficient for reliable comparison and reuse across datasets.

The CF Conventions provide a community-governed semantic layer on top of NetCDF through standard names, units, and coordinate semantics.

CF compliance enables automated discovery, comparison, and integration in climate and atmospheric science workflows.

Semantic interoperability depends on community-agreed conventions, not on file formats or variable names alone.

Content from Technical interoperability: Streaming protocols

Last updated on 2025-11-18

Estimated time: 40 minutes

Overview

Questions

- What is the DAP protocol?

- Why is DAP an example of interoperability?

- How to access a NetCDF file through an OPeNDAP interface, using the DAP protocol?

- How to read a NetCDF file programmatically via the DAP protocol, with the open_dataset function from the xarray Python library?

- How to explore and manipulate a NetCDF file programmatically?

Objectives

- Understand why DAP is an interoperable protocol.

- Know how to access and read a NetCDF file using the DAP protocol.

Content

✔ Streaming protocols = technical interoperability

OPeNDAP / DAP allow:

- Remote access to datasets without downloading entire files

- Server-side subsetting and slicing of large NetCDF files

✔ Why this matters

- Enables scalable workflows (ERA5, ORAS5, CMIP6)

- Facilitates AI training pipelines

Hands-on Exercise (Python)

- Use a Python client to read a remote NetCDF file via OPeNDAP

- Perform a small selection (time window, variable subset)

- Compare the cost with a full file download
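A minimal sketch of these steps, assuming xarray and an OPeNDAP-capable backend (for example the netCDF4 library) are installed. It reuses the IDRA sample URL from the Compliance Checker exercise. Because the variable and dimension names of that file are not given here, small_selection (a hypothetical helper) simply takes the first data variable and the first few elements along each of its dimensions; a real analysis would select by name, for example a time window with .sel(time=slice(...)). Sizes are compared with nbytes, which for lazily opened variables xarray should derive from shape and dtype rather than by reading the values.

```python
import xarray as xr

# Sample OPeNDAP endpoint (the dataset itself, not the HTML catalog page).
SAMPLE_URL = (
    "https://opendap.4tu.nl/thredds/dodsC/IDRA/2009/04/27/"
    "IDRA_2009-04-27_06-08_raw_data.nc"
)


def small_selection(ds: xr.Dataset, n: int = 10) -> xr.DataArray:
    """Keep at most n elements per dimension of the first data variable."""
    name = next(iter(ds.data_vars))
    da = ds[name]
    return da.isel({dim: slice(0, n) for dim in da.dims})


def compare_sizes(ds: xr.Dataset, subset: xr.DataArray) -> tuple[int, int]:
    """Bytes of the whole dataset vs. the selection (from shape and dtype)."""
    return ds.nbytes, subset.nbytes


if __name__ == "__main__":
    # Opening is lazy: xarray reads metadata over DAP, not the whole file.
    remote = xr.open_dataset(SAMPLE_URL)
    sub = small_selection(remote)
    full_bytes, sub_bytes = compare_sizes(remote, sub)
    print(f"full dataset: {full_bytes:,} bytes; selection: {sub_bytes:,} bytes")
    values = sub.load()  # only now are the selected values transferred
    print(values)
```

The selection itself is also lazy; only the final .load() asks the server for the selected values of the data variable.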

Exercise: TRUE or FALSE?

Is this statement true or false? Why do you think so?

> The xarray.open_dataset() function you used has downloaded the dataset file to your computer.

False. xarray accessed the dataset through the DAP protocol, which transfers the metadata needed to explore and summarise its dimensions and variables. The values of the data variables have not been downloaded to your computer; they are only fetched when you load or compute a selection.

- OPeNDAP (DAP) is a protocol that enables remote access to subsets of scientific datasets without downloading entire files, exemplifying technical interoperability.

- Using OPeNDAP allows efficient server-side subsetting and slicing of large NetCDF files, facilitating scalable workflows for large climate datasets.

- Programmatic access to NetCDF files via OPeNDAP can be achieved using libraries like xarray, enabling efficient data exploration and manipulation.

Content from Technical interoperability: API

Last updated on 2025-12-23

Estimated time: 120 minutes

Overview

Questions

- What is a REST API?

- Why are APIs an example of interoperability?

- How to create a draft dataset using the 4TU.ResearchData REST API?

- How to submit a dataset for review using the 4TU.ResearchData REST API?

Objectives

- Understand why APIs are interoperable protocols.

- Know how to submit data to a data repository via its API.

Content

✔ APIs = technical interoperability layer

APIs enable:

- Automated data retrieval

- Publication

- Distributed pipelines

- Machine-to-machine workflows

- Cross-institutional integration

APIs are to infrastructures what CF conventions are to NetCDF: shared semantics + shared rules.

✔ Key API concepts

- HTTP

- REST verbs (GET/POST/PUT/DELETE)

- JSON as structural metadata

- Stable identifiers

- Versioning

✔ What APIs must comply with

- HTTP as transport

- Predictable URL patterns

- JSON serialization

- Metadata standards (schema.org, DCAT, CF, ISO 19115)

- Self-describing endpoints
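HTTP as transport, predictable URLs and JSON serialization can be sketched with Python's standard library alone. The base URL https://data.4tu.nl/v2, the /articles path and the limit parameter are assumptions (a Figshare-style v2 API) and should be checked against the 4TU.ResearchData API documentation; the title and doi keys read from each record are likewise assumed. The functions only build and parse, so they can be inspected without network access.

```python
import json
import urllib.parse
import urllib.request

# Assumption: a Figshare-style "v2" endpoint listing public datasets.
BASE_URL = "https://data.4tu.nl/v2"


def build_list_request(limit: int = 5) -> urllib.request.Request:
    """Build (but do not send) a GET request for a page of public datasets."""
    query = urllib.parse.urlencode({"limit": limit})
    return urllib.request.Request(
        f"{BASE_URL}/articles?{query}",
        headers={"Accept": "application/json"},
        method="GET",
    )


def summarise(records: list[dict]) -> list[tuple[str, str]]:
    """Extract (title, doi) pairs; missing keys become empty strings."""
    return [(r.get("title", ""), r.get("doi", "")) for r in records]


if __name__ == "__main__":
    with urllib.request.urlopen(build_list_request(), timeout=30) as response:
        records = json.load(response)  # the JSON body is a list of records
    for title, doi in summarise(records):
        print(doi, "|", title)
```

Keeping request construction separate from sending makes the predictable URL pattern visible and easy to test.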

Hands-on Exercises

1. Query datasets via the 4TU API

2. Retrieve metadata and interpret the JSON

3. Publish a dataset programmatically

4. Update metadata

5. Integration workflow: Sensor API → enrich metadata (CF-like) → publish to 4TU

These exercises reinforce technical interoperability.
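Publishing follows the same pattern with a different REST verb. The sketch below builds a POST request that would create a draft dataset. The endpoint /v2/account/articles, the "token ..." Authorization header and the environment variable FOURTU_API_TOKEN are all assumptions modelled on Figshare-style APIs, and the metadata values are hypothetical. Submitting the draft for review is a separate call that is not shown; check the repository's API documentation for it.

```python
import json
import os
import urllib.request

# Assumption: Figshare-style endpoint for creating a draft in your account.
CREATE_DRAFT_URL = "https://data.4tu.nl/v2/account/articles"


def build_create_draft_request(metadata: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates a draft dataset."""
    body = json.dumps(metadata).encode("utf-8")  # JSON serialization
    return urllib.request.Request(
        CREATE_DRAFT_URL,
        data=body,
        headers={
            "Authorization": f"token {token}",  # assumed token scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical metadata for a test upload.
    draft = {
        "title": "Sensor data test upload",
        "description": "Draft created programmatically for the API exercise.",
    }
    request = build_create_draft_request(draft, os.environ["FOURTU_API_TOKEN"])
    with urllib.request.urlopen(request, timeout=30) as response:
        print(response.status, response.read().decode("utf-8"))
```

Reading the token from the environment keeps credentials out of scripts and notebooks that may themselves be shared.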

- APIs (Application Programming Interfaces) are interoperable protocols that enable machine-to-machine communication, allowing automated data retrieval, publication, and integration across distributed systems.

- REST APIs use standard HTTP methods (GET, POST, PUT, DELETE) and JSON serialization to provide predictable and self-describing endpoints, facilitating seamless interaction with data repositories.

- By adhering to established metadata standards and versioning practices, APIs ensure consistent and reliable access to datasets, supporting scalable and interoperable workflows in climate science.

Content from Cloud-Native Layouts

Last updated on 2025-11-18

Estimated time: 45 minutes

Overview

Questions

- What are cloud-native data layouts?

- Why are cloud-native layouts important for interoperability in climate science data?

- What are key technologies for cloud-native data layouts?

Objectives

- Understand the concept of cloud-native data layouts.

- Recognize the importance of cloud-native layouts for interoperability in climate science data.

- Identify key technologies used in cloud-native data layouts.

Content

✔ What is a cloud-native layout?

- Chunked, object-based storage

- Parallel I/O

- Lazy loading

- Works natively with Dask, Ray, Spark, Pangeo

✔ Why cloud-native matters for climate datasets

- ERA5, CMIP6, GOES are TB/PB scale

- Repeated slicing for ML

- Cloud-native = scalable structural interoperability

✔ Key technologies

- Zarr

- Kerchunk

- Parquet

- Example projects: ERA5-to-Zarr, GOES-16 COG/Zarr

✔ NetCDF vs cloud-native

- NetCDF = interoperable but not cloud-native

- Zarr = cloud-native but depends on semantic standards (CF)
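Why chunking matters for repeated slicing can be shown with a little arithmetic. In the Zarr v2 layout each chunk is stored as a separate object whose key is its chunk index joined with dots (for example 0.1.1), so the number of chunks a selection overlaps is roughly the number of objects that must be read. The sketch below is plain Python (it does not call the zarr library) and uses a hypothetical hourly, 0.25 degree global grid for one year (8760 × 721 × 1440 points) with two hypothetical chunkings.

```python
from itertools import product


def chunks_touched(shape, chunks, selection):
    """Return Zarr-v2-style chunk keys (e.g. "0.1.1") overlapped by a selection.

    shape, chunks: one int per dimension (array size and chunk size).
    selection: one (start, stop) index range per dimension, stop exclusive.
    """
    per_dim = []
    for size, chunk, (start, stop) in zip(shape, chunks, selection):
        if not 0 <= start < stop <= size:
            raise ValueError(f"invalid range {start}:{stop} for size {size}")
        # Chunk indices containing the first and the last selected element.
        per_dim.append(range(start // chunk, (stop - 1) // chunk + 1))
    # Every combination of per-dimension chunk indices is one stored object.
    return [".".join(map(str, idx)) for idx in product(*per_dim)]


if __name__ == "__main__":
    shape = (8760, 721, 1440)        # hypothetical: hourly, 0.25 degree grid, one year
    daily_maps = (24, 721, 1440)     # each chunk holds one day of full global maps
    regional_year = (8760, 90, 180)  # each chunk holds a full year for one region

    two_days = ((0, 48), (0, 721), (0, 1440))
    one_point_year = ((0, 8760), (100, 101), (200, 201))

    print(len(chunks_touched(shape, daily_maps, two_days)))        # 2
    print(len(chunks_touched(shape, daily_maps, one_point_year)))  # 365
    print(len(chunks_touched(shape, regional_year, two_days)))     # 72
    print(chunks_touched(shape, regional_year, one_point_year))    # ['0.1.1']
```

With daily map chunks, two days of global maps need 2 objects but a one-year series at a single grid point needs 365; with regional full-year chunks the trade-off reverses. Matching the chunking to the dominant access pattern is a key design decision for cloud-native layouts.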

Hands-on Exercise: Convert NetCDF → virtual Zarr

Using Kerchunk: NetCDF → Zarr reference → open with xarray → inspect CF metadata

This exercise reinforces structural interoperability.

- Cloud-native data layouts, such as Zarr and Parquet, are designed for efficient storage and access in cloud environments, enabling scalable and parallel processing of large climate datasets.

- Cloud-native layouts enhance interoperability by allowing seamless integration with distributed computing frameworks like Dask, Ray, and Spark, facilitating efficient data slicing and analysis.

- Key technologies for cloud-native data layouts include Zarr for chunked storage, Kerchunk for virtual datasets, and Parquet for tabular data, all of which support scalable and interoperable workflows in climate science.

Content from Interoperable Infrastructure in the AI Era

Last updated on 2025-11-18

Estimated time: 20 minutes

Overview

Questions

- What are the requirements for an AI-ready data infrastructure in climate science?

- Why is interoperability crucial for AI applications in climate science?

- What are the key elements of an AI-ready interoperable data infrastructure?

Objectives

- Understand the requirements for an AI-ready data infrastructure in climate science.

- Recognize the importance of interoperability for AI applications in climate science.

- Identify the key elements of an AI-ready interoperable data infrastructure.

In this episode you will learn about:

AI needs

- Large-scale multidimensional datasets

- Consistent CF metadata

- Chunked cloud-native formats

- STAC-like discoverability

- Stable APIs for pipeline automation

Challenges

- Data fragmentation

- Lack of standardization

- FAIR gaps

- Poorly documented repositories

Key elements of an AI-ready infrastructure

- Standardized metadata (e.g., the CF Conventions)

- Community formats

- Cloud-native layouts

- Stable and well documented APIs

- STAC catalogs

- Versioning & identifiers
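To make "STAC catalogs" more concrete, here is a minimal sketch of a STAC Item, following the STAC 1.0.0 Item structure, for a hypothetical global precipitation dataset, built as a plain Python dictionary. The identifier, the asset URL and the custom standard_names property are illustrative assumptions, and application/x-netcdf is a media type commonly used for NetCDF rather than an official one. The time range is expressed with start_datetime and end_datetime, in which case STAC requires datetime to be null.

```python
import json


def make_stac_item(item_id, bbox, start, end, asset_href, standard_names):
    """Build a minimal STAC Item (as a plain dict) for a gridded dataset.

    bbox: [west, south, east, north] in degrees; start/end: ISO 8601 strings.
    standard_names: CF standard names, stored in a custom (hypothetical) property.
    """
    west, south, east, north = bbox
    return {
        "type": "Feature",
        "stac_version": "1.0.0",
        "id": item_id,
        "geometry": {
            "type": "Polygon",
            # Closed ring: the first and last positions are identical.
            "coordinates": [[
                [west, south], [east, south], [east, north],
                [west, north], [west, south],
            ]],
        },
        "bbox": [west, south, east, north],
        "properties": {
            "datetime": None,  # must be null when a start/end range is given
            "start_datetime": start,
            "end_datetime": end,
            "standard_names": standard_names,
        },
        "links": [],
        "assets": {
            "data": {
                "href": asset_href,
                "type": "application/x-netcdf",
                "roles": ["data"],
            }
        },
    }


if __name__ == "__main__":
    item = make_stac_item(
        "global-precip-2010-2020",               # hypothetical identifier
        [-180.0, -90.0, 180.0, 90.0],
        "2010-01-01T00:00:00Z",
        "2020-12-31T23:59:59Z",
        "https://example.org/data/precip.nc",    # hypothetical location
        ["precipitation_flux"],
    )
    print(json.dumps(item, indent=2))
```

Because the item is plain JSON, any STAC-aware client can discover the dataset, and the CF standard names travel with it as searchable metadata.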

Interoperability enables AI-ready infrastructure

Interoperability determines:

- Efficient access

- Reproducibility

- Integrability

- Trust in results

Examples

- FAIR-EO (FAIR Earth Observations) (https://oscars-project.eu/projects/fair-eo-fair-open-and-ai-ready-earth-observation-resources)

- AI-ready data infrastructure requires large-scale multidimensional datasets, consistent CF metadata, chunked cloud-native formats, STAC-like discoverability, and stable APIs for pipeline automation.

- Interoperability is crucial for AI applications in climate science as it enables efficient data access, reproducibility of results, integrability of diverse datasets, and trust in AI-driven insights.

- Key elements of an AI-ready interoperable data infrastructure include adherence to community formats, cloud-native layouts, stable APIs, comprehensive data catalogs, and robust versioning and identifier systems.